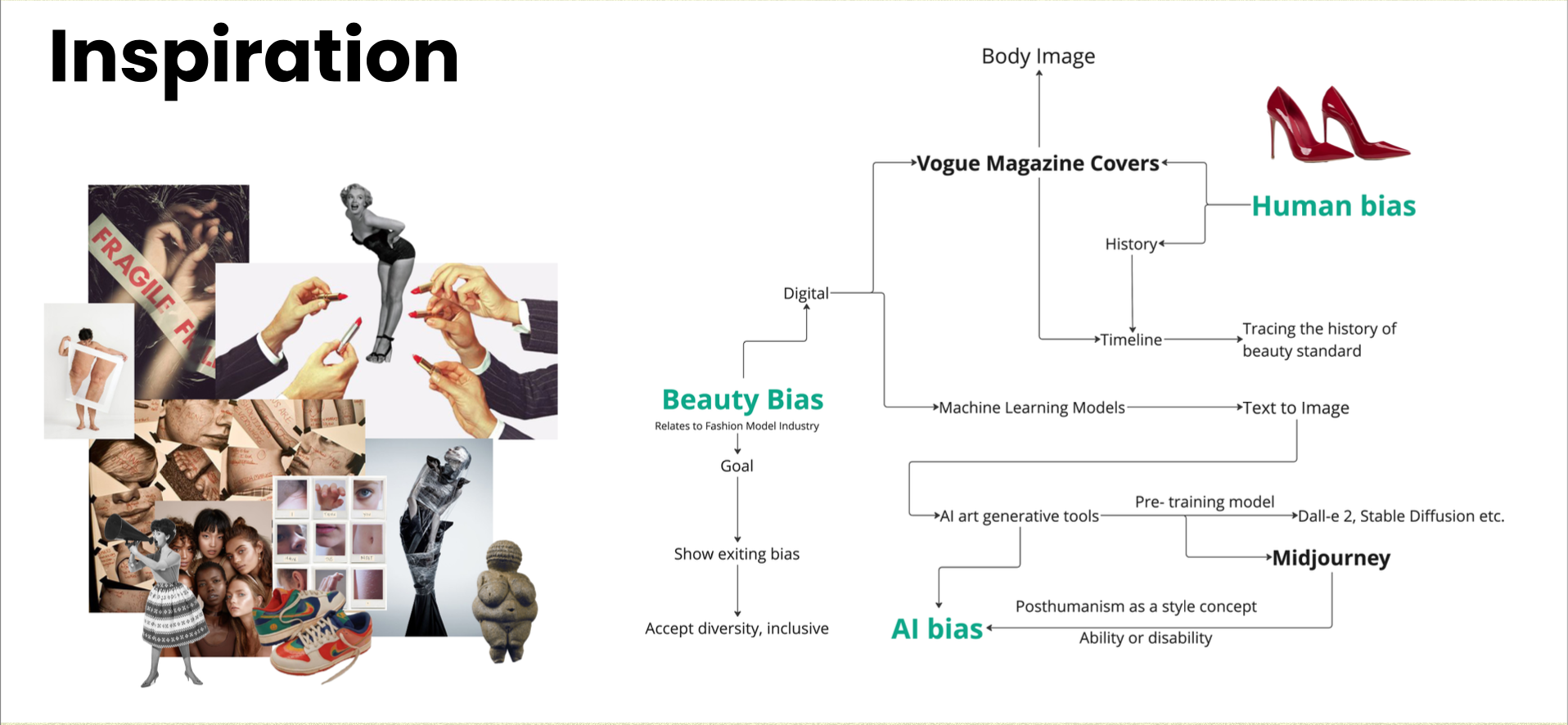

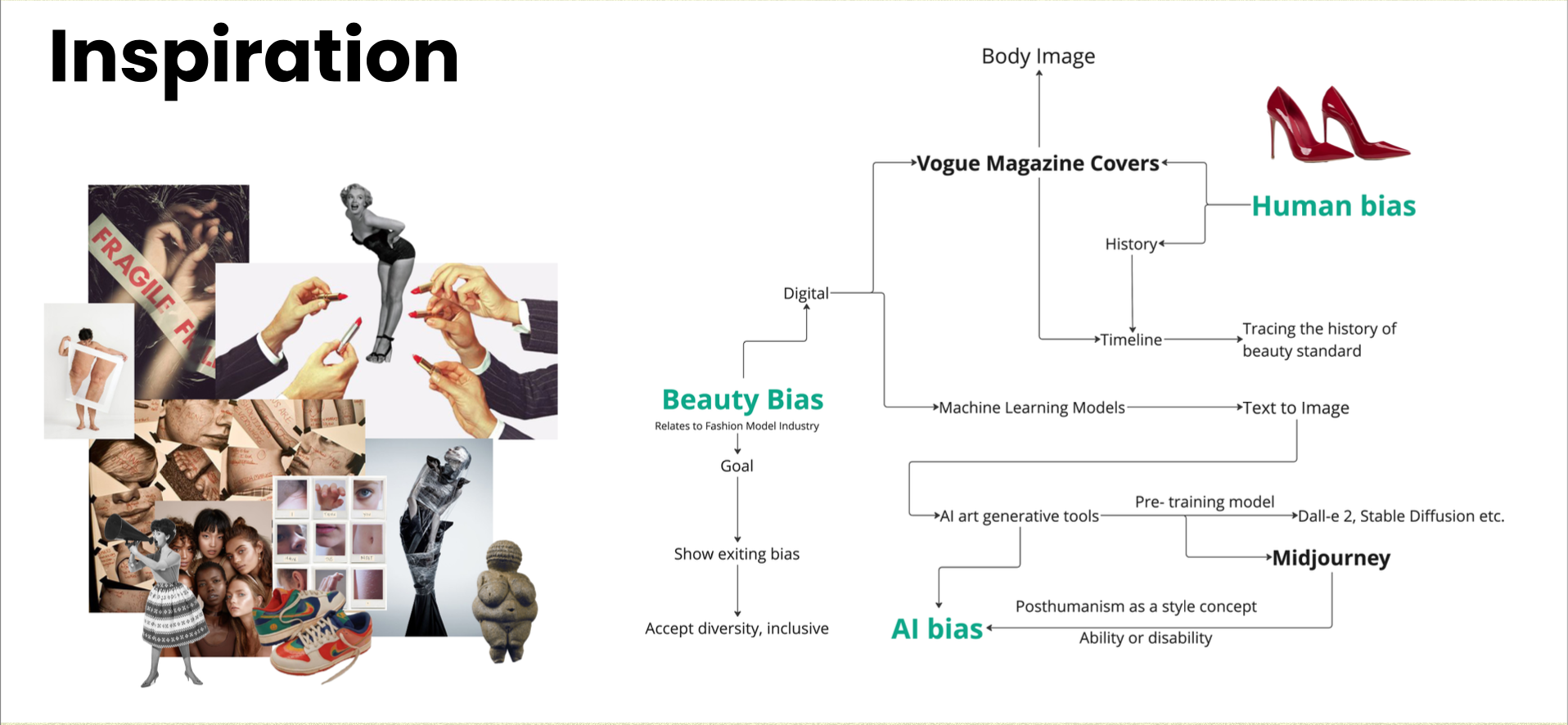

This project focuses on how human bias about body-image aesthetics changes over time, through a critical analysis of fashion media and of the capabilities of some existing generative artificial intelligence (AI) tools. It further aims to shed light on the inherent bias in AI systems, which manifests as artificially generated body conformity with potential sociocultural implications. The project also demonstrates how to work with existing, pre-trained AI art-generator tools to produce results that reflect diverse beauty standards. It examines how the concept of posthumanism can be introduced into the designer’s workflow, in the form of text prompts, when collaborating with generative AI tools to create inclusive fashion visuals.

Throughout history, the aesthetics of body image and the definition of the “ideal body” have changed across eras and social backgrounds. From ancient Egypt to the present day, beauty standards have been shaped by cultures in both the East and the West. People have consistently described an “ideal” body image as iconic according to differing cultural norms, perceptions, opinions, standards, and preferences.

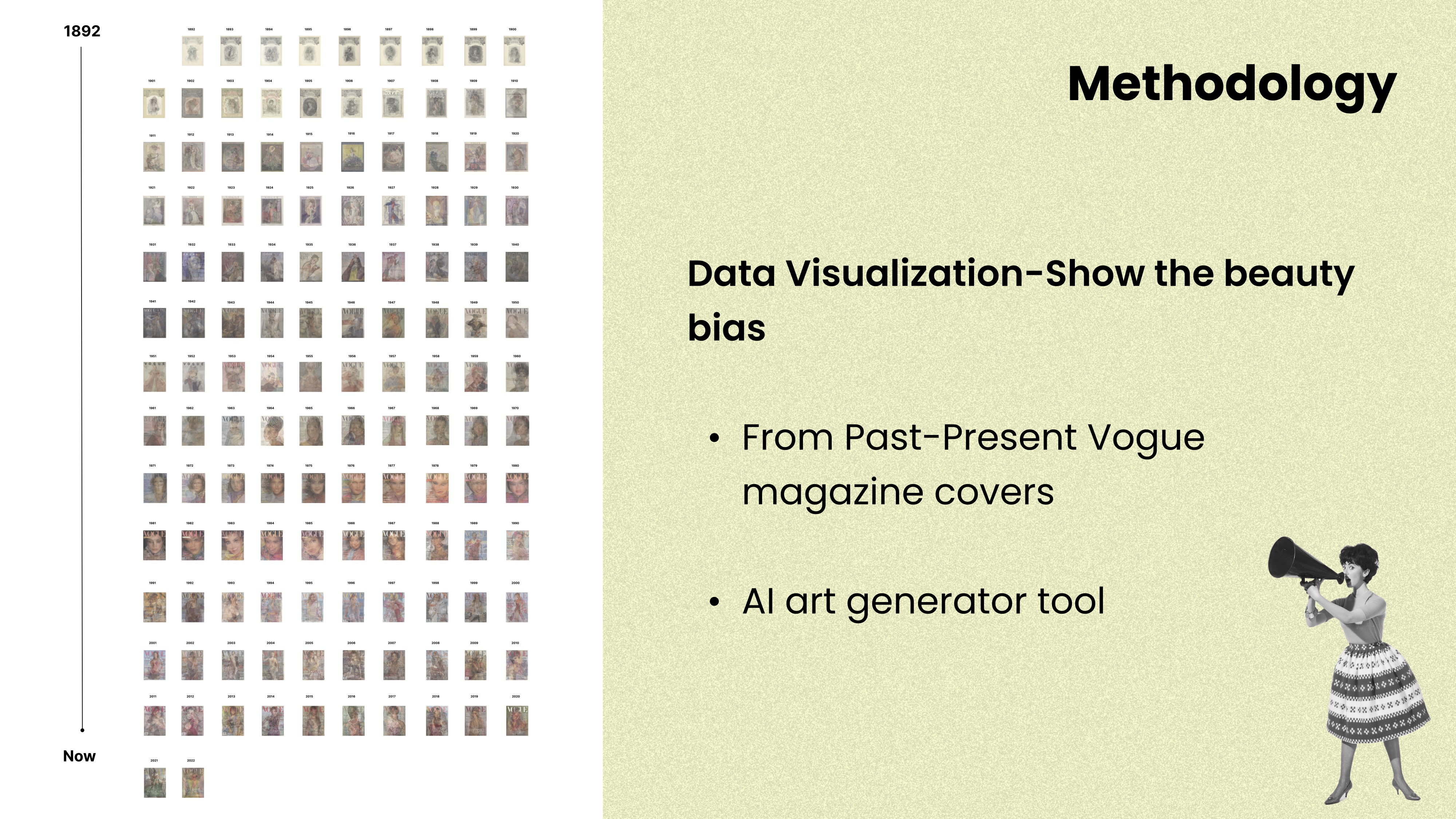

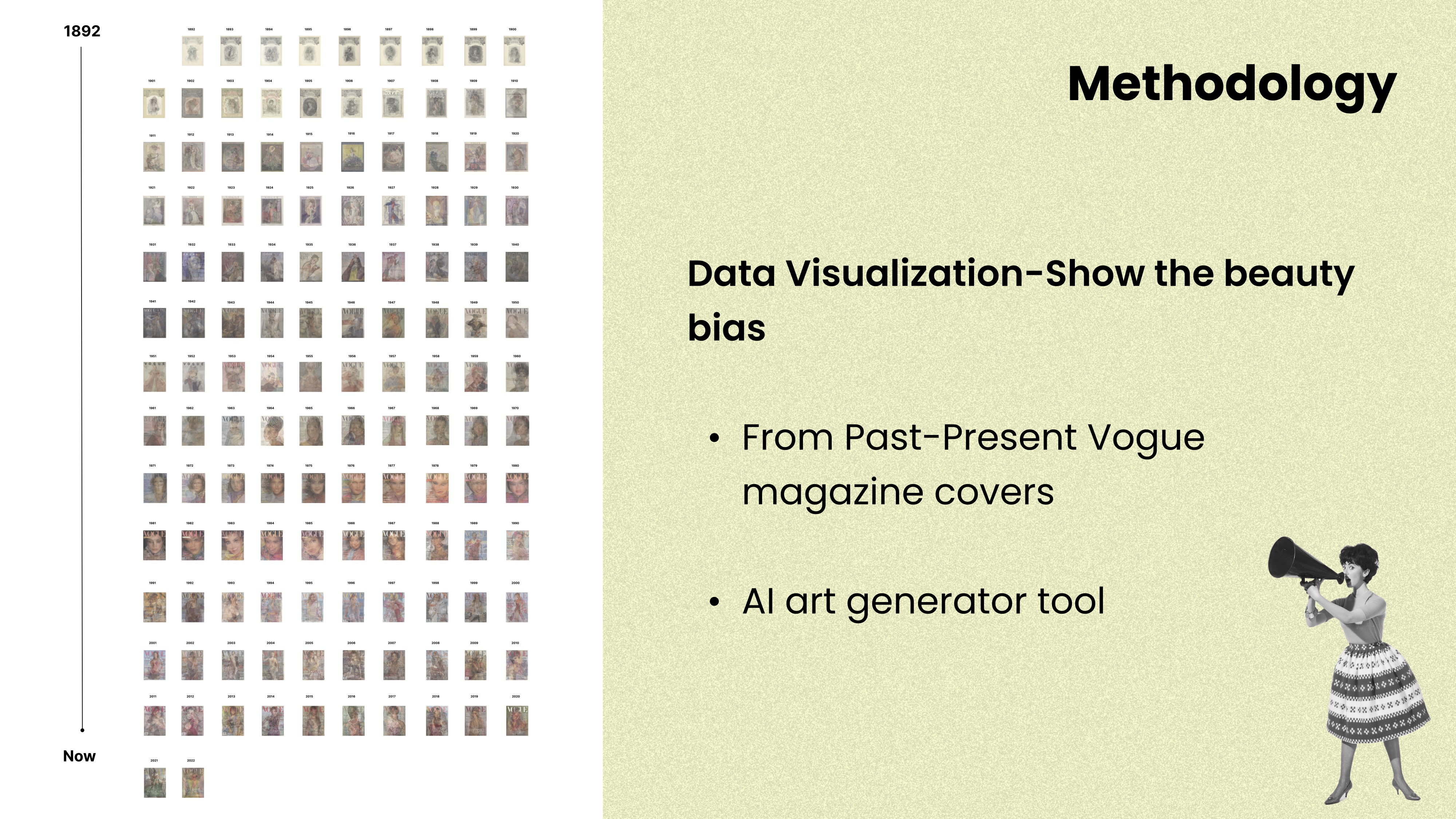

Nowadays, the world revolves around data, numbers, information, and forms. Data visualization uses techniques to make data visible. The information it presents can take many forms and is not limited to numbers: images, too, can form a dataset to be collected, categorized, and analyzed. Generative Adversarial Networks (GANs) are a type of generative model that uses deep learning techniques, including convolutional neural networks (CNNs), together with an adversarial training process to generate new data resembling the original training data.
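For reference, the adversarial training process is usually written, in the original GAN formulation, as a two-player minimax game between a generator $G$ and a discriminator $D$. The symbols below are standard notation rather than anything specific to this project. $p_{\text{data}}$ is the distribution of training images, and $p_z$ is a noise distribution from which $G$ draws its inputs $z$.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator $D$ tries to output values near 1 for real images and near 0 for generated ones. The generator $G$ tries to produce images $G(z)$ that $D$ scores as real. As training proceeds, the generated images come to resemble the training data, including whatever biases that data contains.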

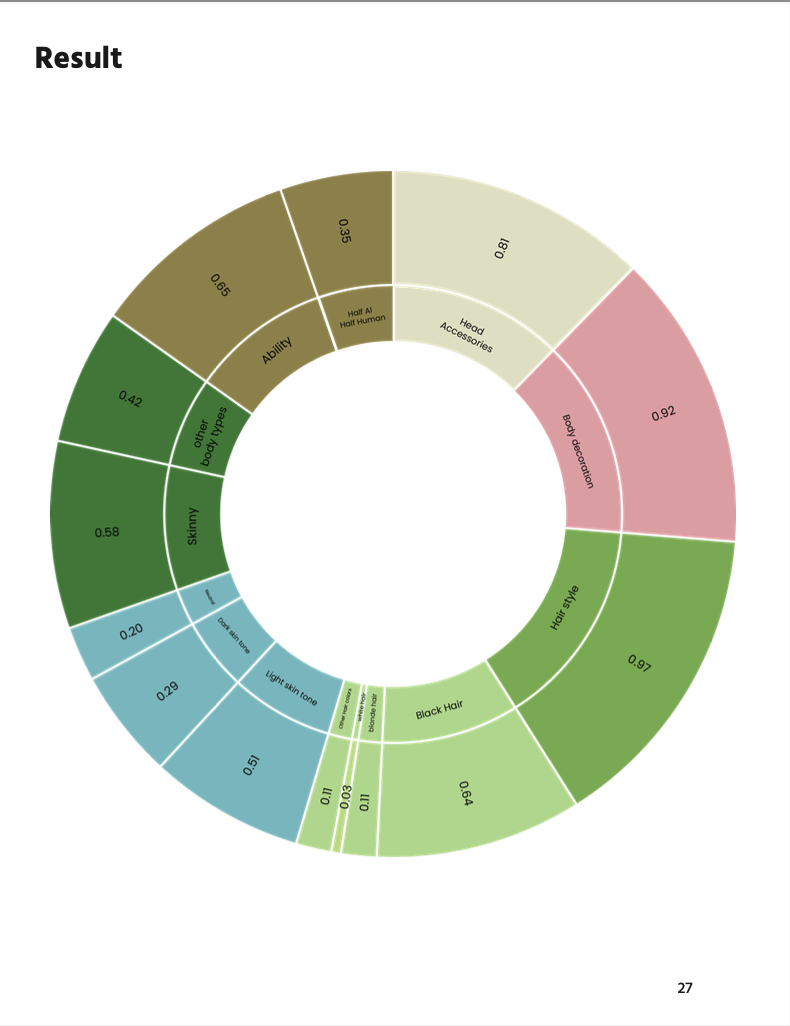

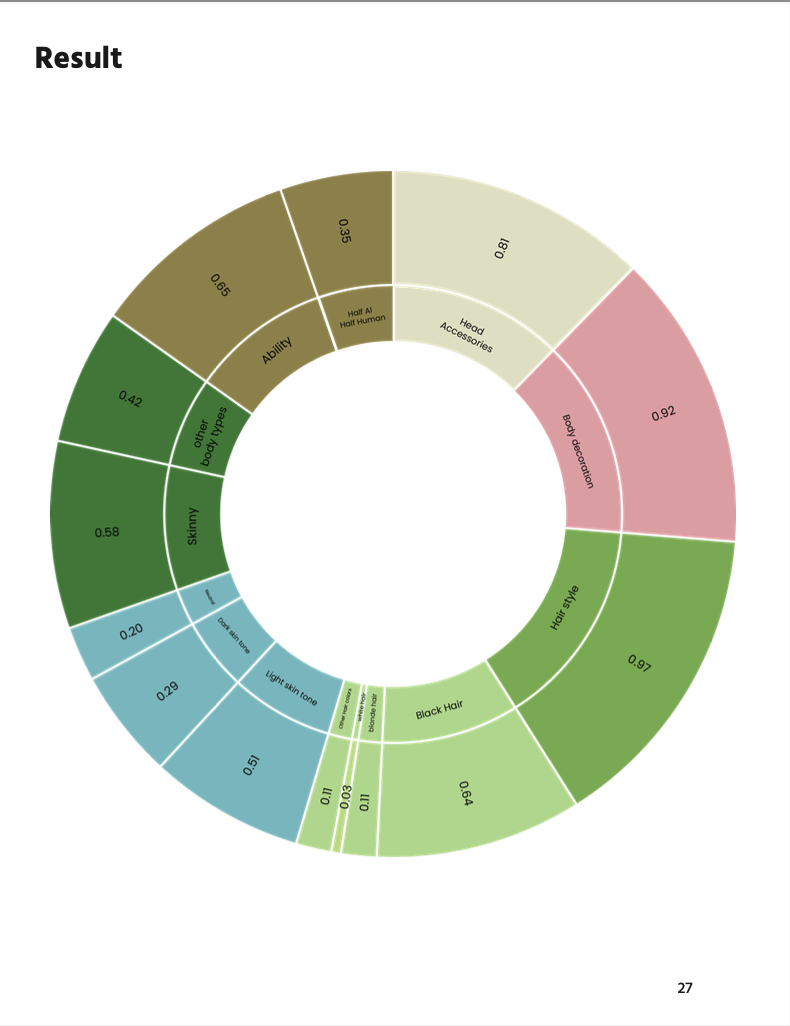

Based on my hypothesis about AI beauty bias, the design approach of my experiment is to test this bias and find a method to improve the experience. In other words, the aim is to find a way toward fairness, diversity, and inclusivity. In contrast to my original plan of manually analyzing body images for beauty, I incorporate existing machine-learning models to assist with the critique process. This approach still aims at identifying and addressing beauty bias. However, the questions have become more focused on specific aspects such as skin tone, body shape, or clothing texture.

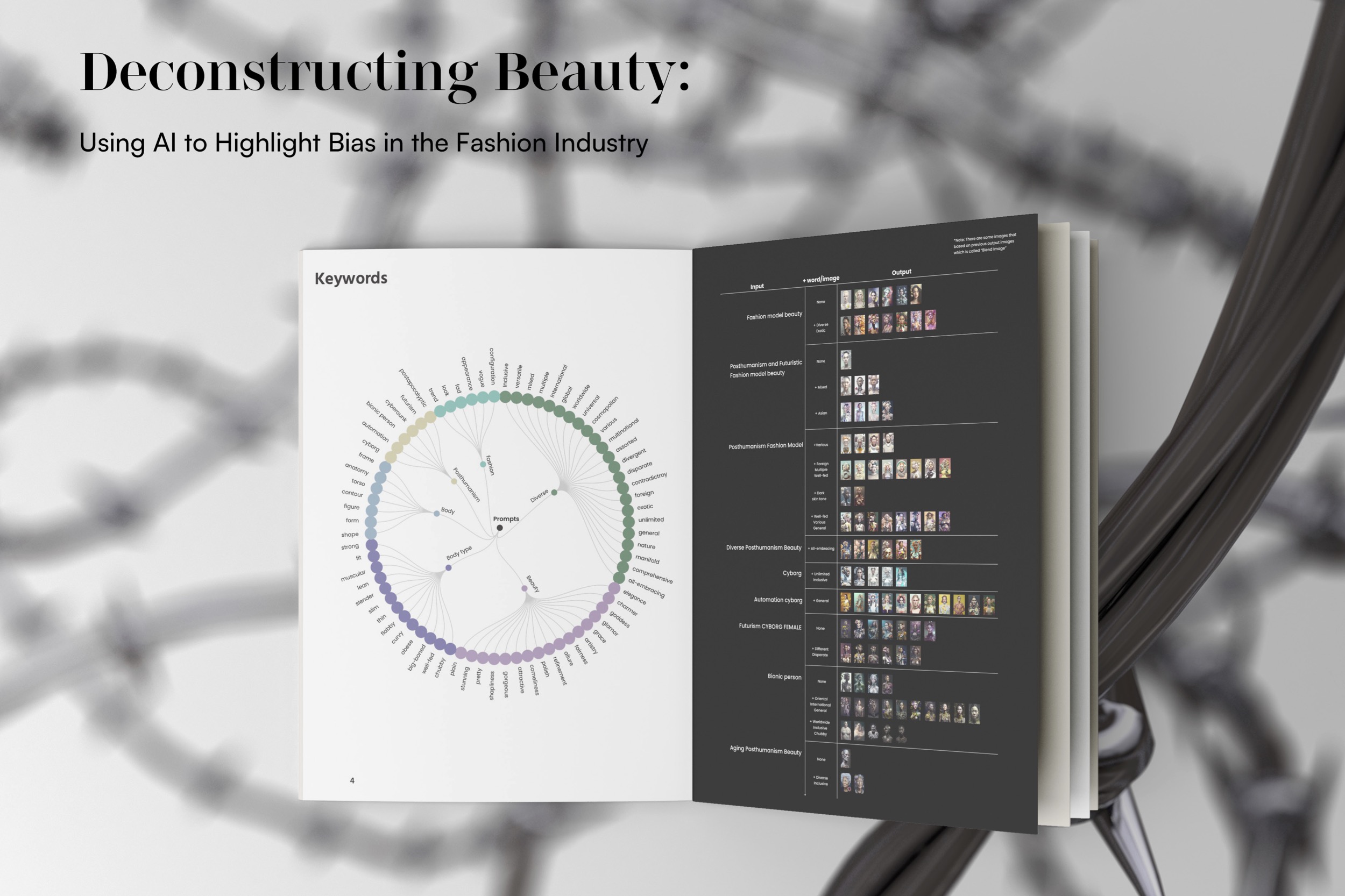

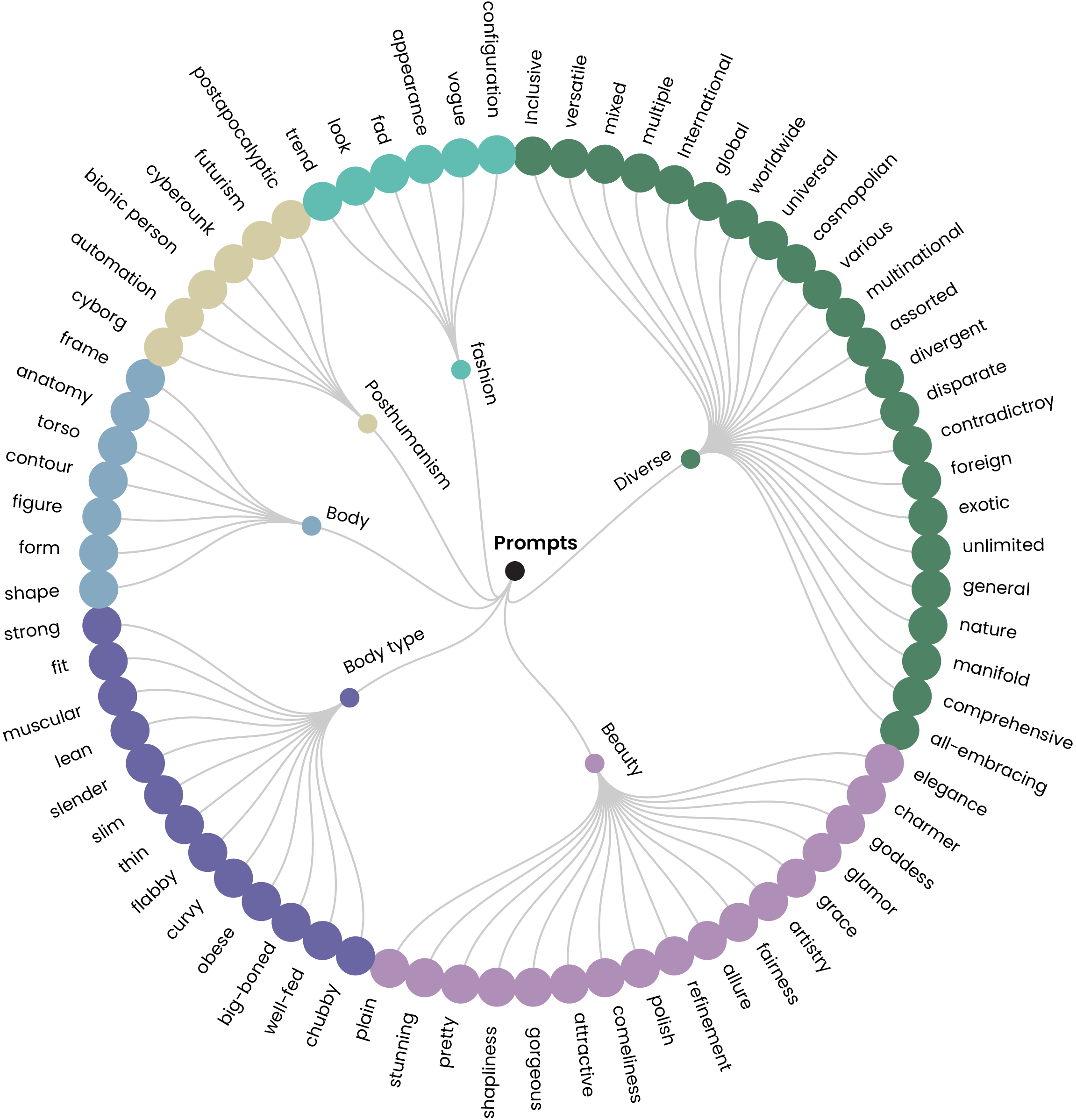

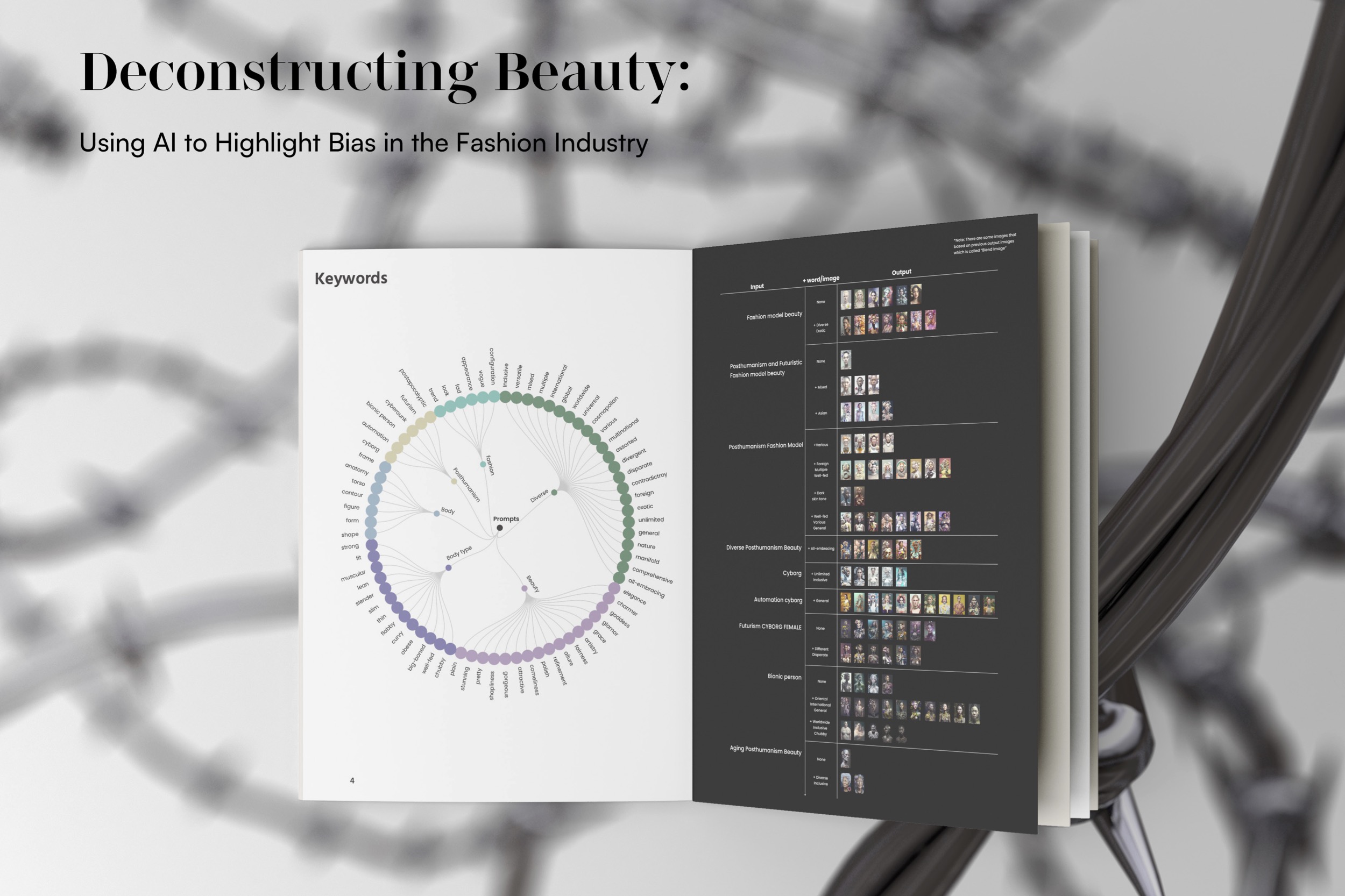

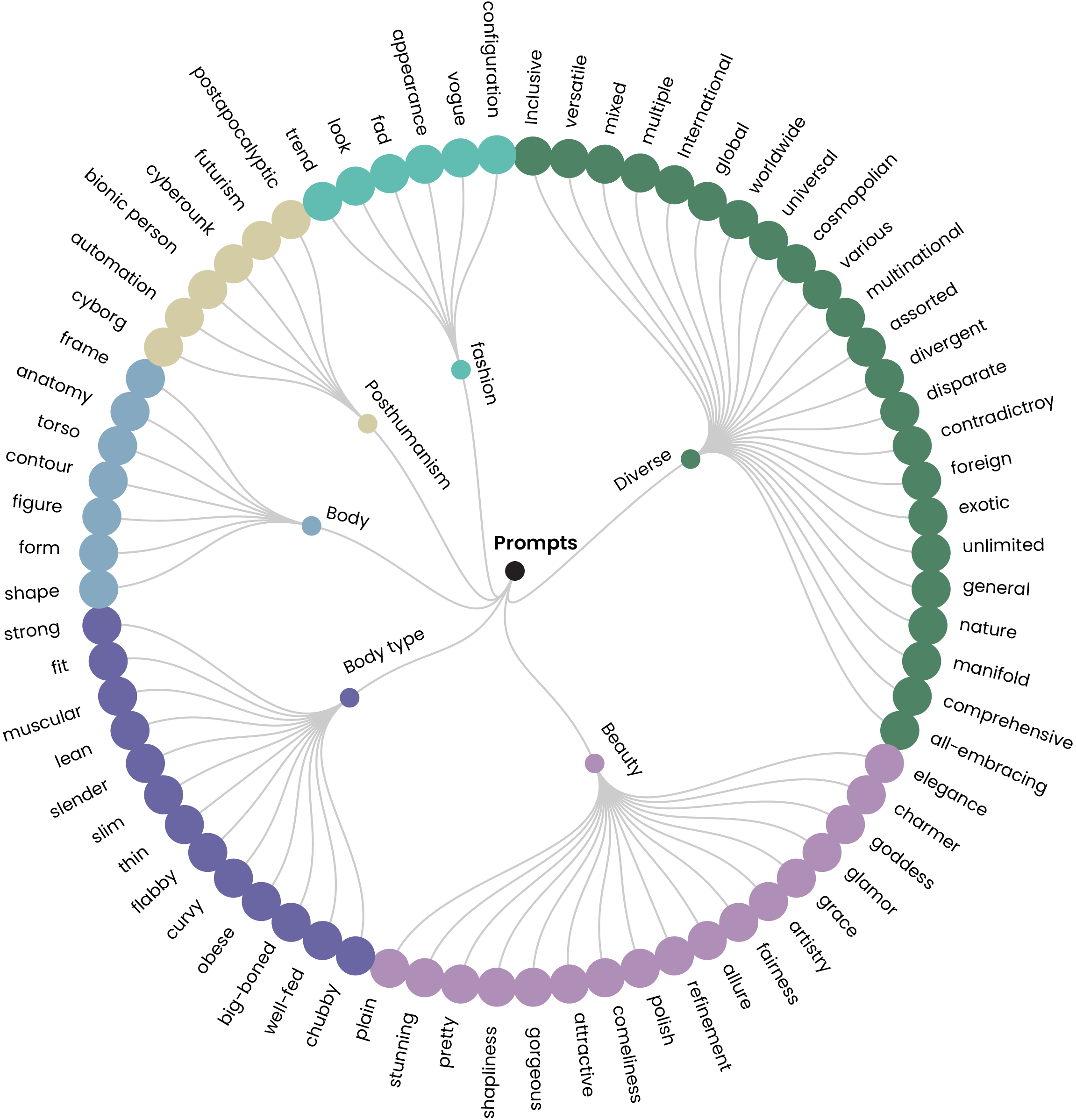

The aim of this data visualization is to provide a guideline for the prompt words used in Midjourney.

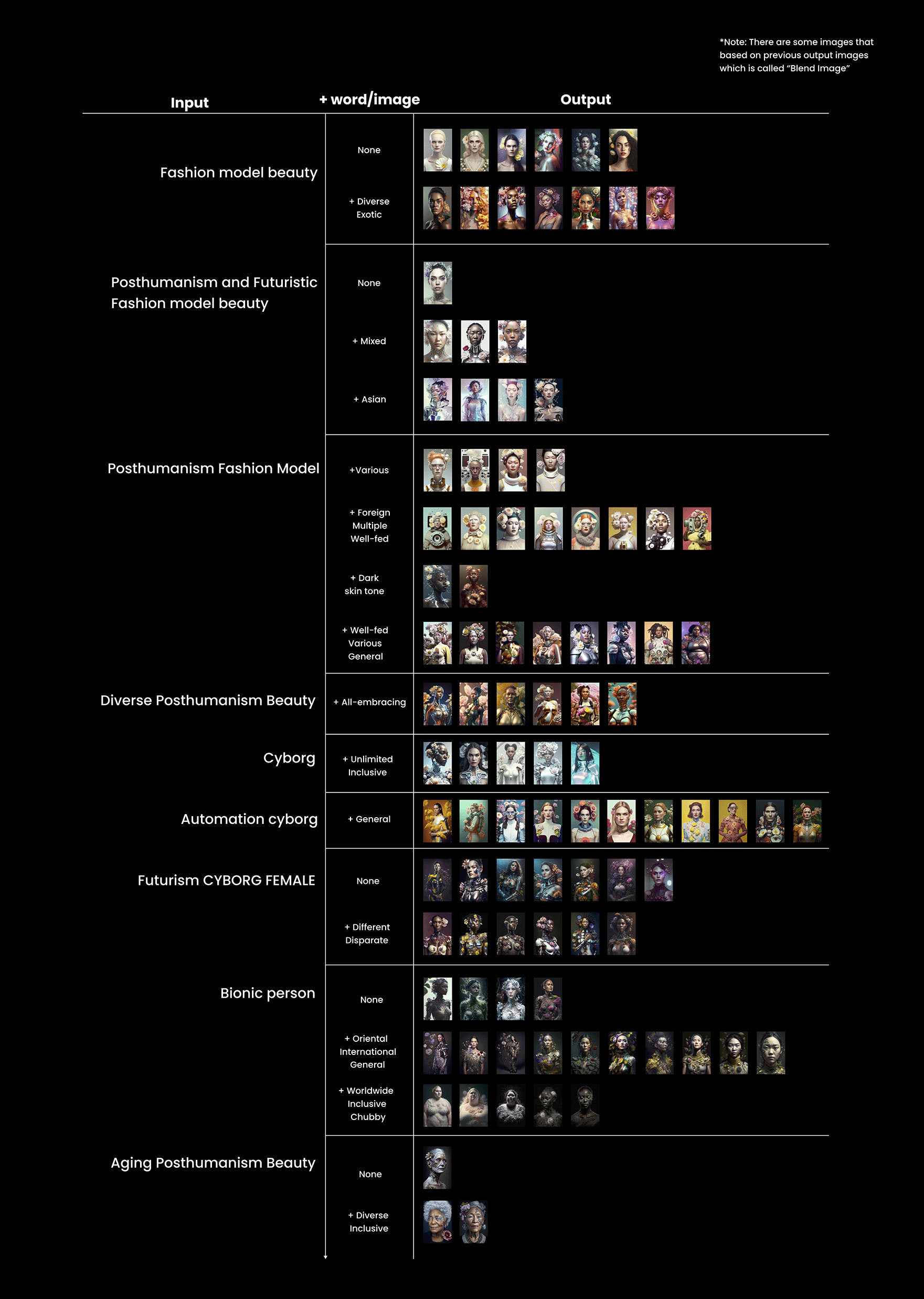

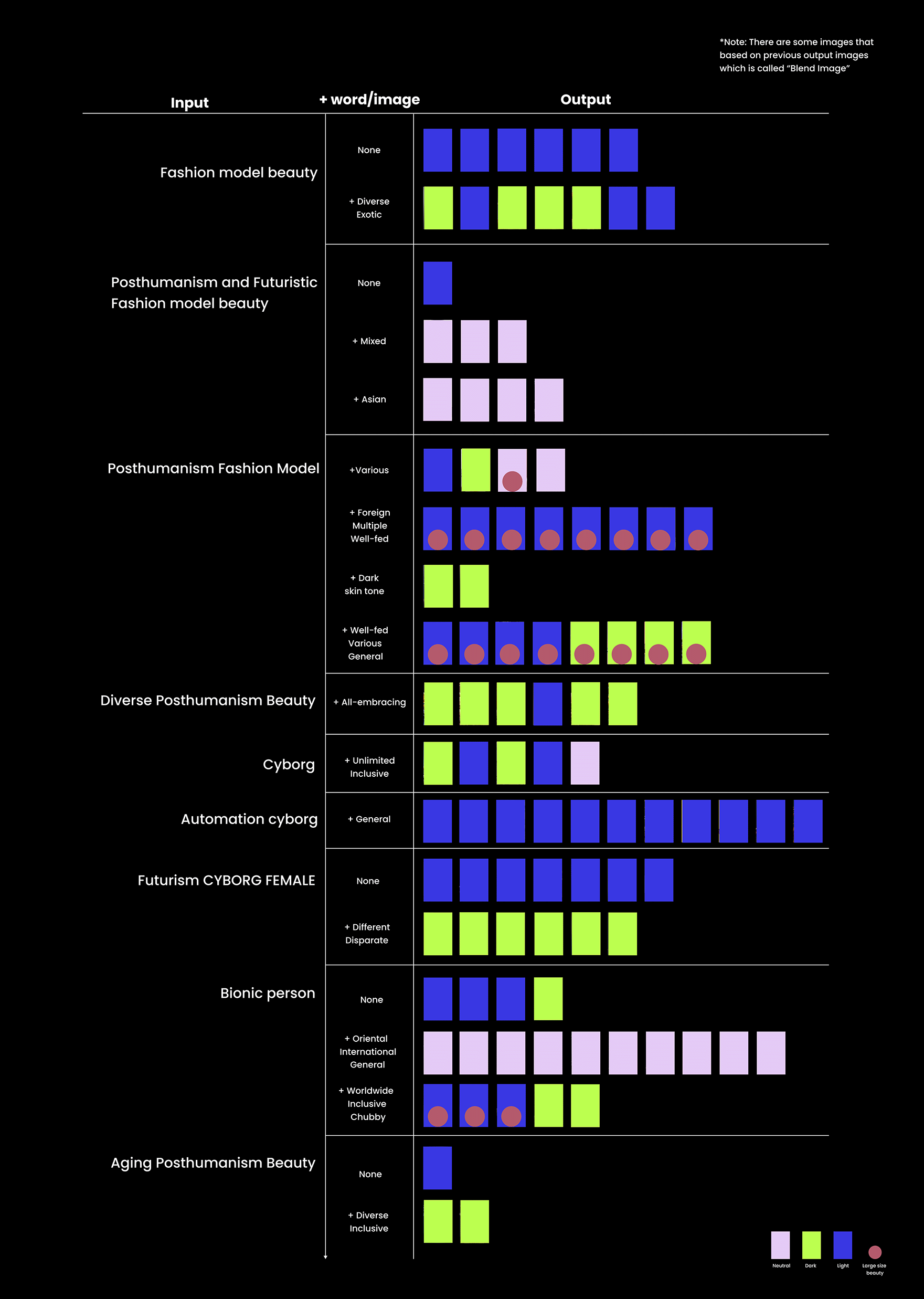

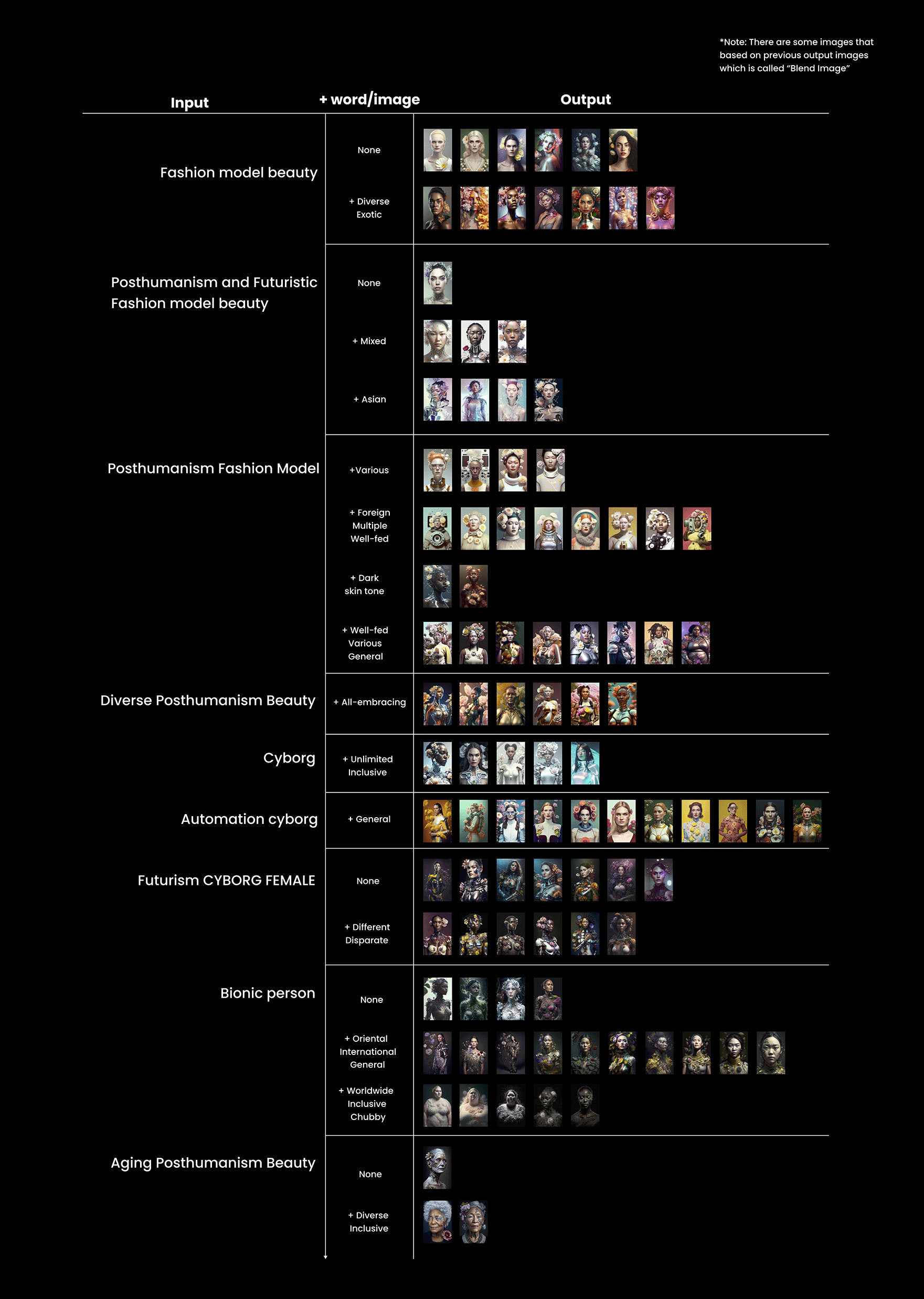

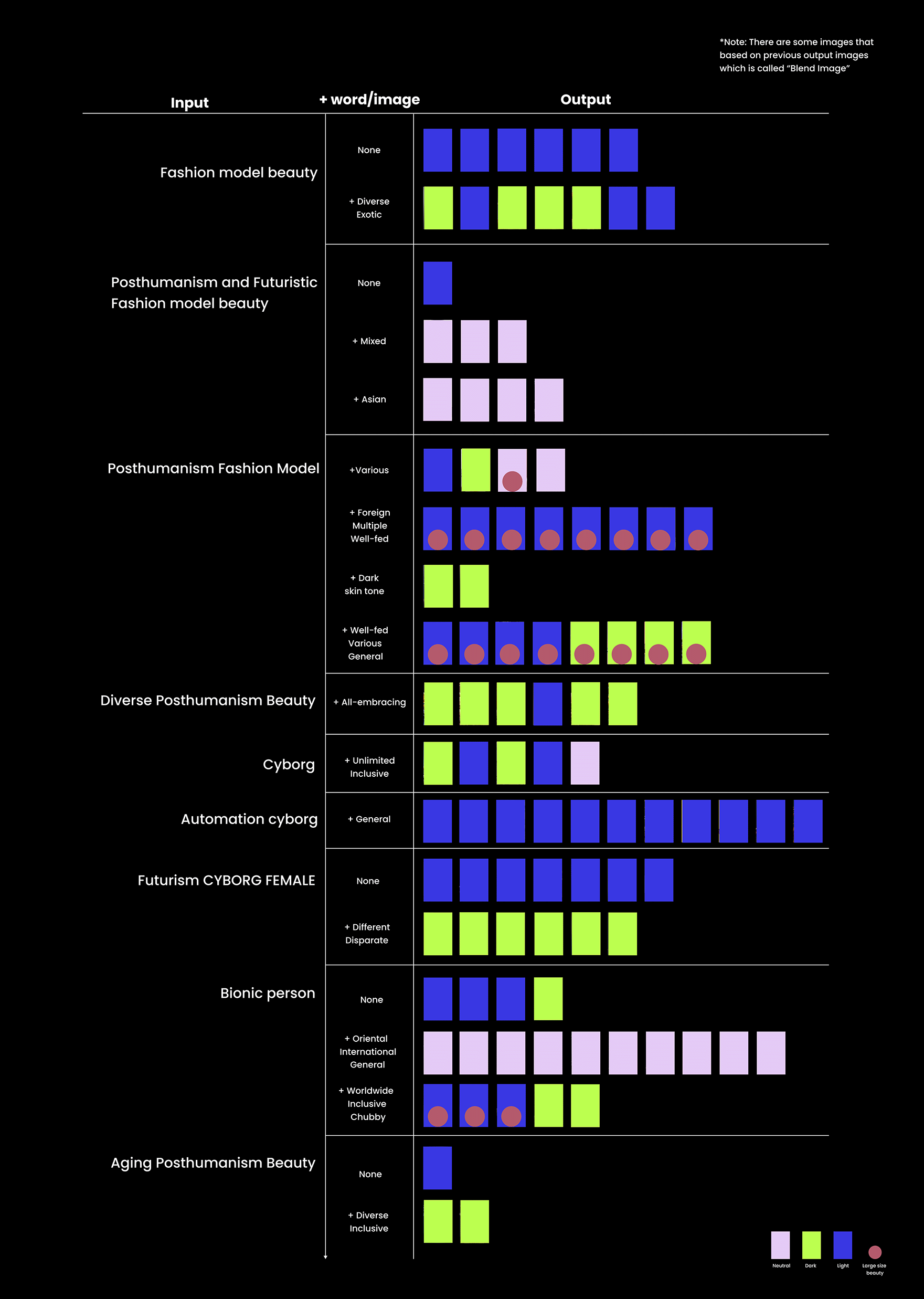

The primary prompt was “Fashion model beauty”, with “Posthumanism and Futuristic” as the art style. I kept re-entering the command, adding and removing synonyms and related key terms to see what would emerge after each change. I also set the background to flowers to help distinguish differences in content and style.
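The add-and-remove procedure above can be made systematic by holding the base prompt, art style, and background fixed and varying only the extra keywords. The following Python sketch is a hypothetical helper, not part of the author’s actual workflow. It only builds prompt strings, which would still be entered into Midjourney by hand, and no Midjourney API is assumed. The function names `build_prompt` and `prompt_variants` and the comma-separated prompt layout are illustrative assumptions.

```python
from itertools import combinations

# Values taken from the text; the modifier list is illustrative, not exhaustive.
BASE_SUBJECT = "Fashion model beauty"
ART_STYLE = "Posthumanism and Futuristic"
BACKGROUND = "flowers"
MODIFIERS = ["diverse", "aging"]


def build_prompt(subject, style, background, modifiers=()):
    """Join the fixed parts and any optional modifiers into one prompt string.

    The comma-separated layout is an assumption, not a Midjourney requirement.
    """
    parts = [subject, *modifiers, f"{style} art style", f"{background} background"]
    return ", ".join(parts)


def prompt_variants(subject, style, background, modifiers):
    """Yield one prompt for every subset of modifiers, from none to all.

    Keeping subject, style, and background fixed means any change in the
    generated images can be attributed to the modifiers added or removed.
    """
    for k in range(len(modifiers) + 1):
        for subset in combinations(modifiers, k):
            yield build_prompt(subject, style, background, subset)


if __name__ == "__main__":
    for prompt in prompt_variants(BASE_SUBJECT, ART_STYLE, BACKGROUND, MODIFIERS):
        print(prompt)
```

With the two example modifiers, this yields four prompts. They are the base prompt, one with “diverse”, one with “aging”, and one with both.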

I tested the keywords listed in the circular dendrogram, as they are related in a cascade. However, only some of the keywords produced the desired results. The images were reorganized and rearranged so that viewers can observe and compare the differences between these artworks. The images and prompts shown are the result of several layers of filtering. The picture shown here (Figure 34) includes some of the initial input words and phrases, such as “fashion magazine mode”, “Posthumanism fashion model beauty”, and “Posthumanism Beauty”. Adding related words such as “diverse” and “aging” brings out other aspects of beauty. The primary focus is therefore on the images’ color, skin tone, and body shape.
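The “cascade” relationship in the circular dendrogram can be thought of as a tree in which each branch narrows a broader keyword. The following short Python sketch is a hypothetical illustration only. Its tree is assembled from phrases quoted in this section rather than copied from the actual dendrogram, and the name `cascade_paths` is an assumption. It walks such a tree and lists every root-to-leaf chain of keywords, that is, each cascade that could be tested as a prompt.

```python
# Hypothetical keyword hierarchy built from phrases quoted in the text;
# the real dendrogram is shown in the figures and is not reproduced here.
KEYWORD_TREE = {
    "beauty": {
        "fashion model beauty": {
            "fashion magazine mode": {},
            "posthumanism fashion model beauty": {},
        },
        "posthumanism beauty": {
            "diverse": {},
            "aging": {},
        },
    },
}


def cascade_paths(tree, prefix=()):
    """Yield every root-to-leaf path in the tree as a tuple of keywords."""
    for keyword, children in tree.items():
        path = prefix + (keyword,)
        if children:
            # Descend into narrower keywords under this branch.
            yield from cascade_paths(children, path)
        else:
            # A leaf ends one cascade of increasingly specific keywords.
            yield path


if __name__ == "__main__":
    for path in cascade_paths(KEYWORD_TREE):
        print(" > ".join(path))
```

Each printed line, such as `beauty > posthumanism beauty > aging`, corresponds to one branch of the hypothetical tree. Marking which leaves produced the desired images would then show which branches were worth keeping.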

Midjourney itself reproduces stereotypes, and it sometimes displays “disrespectful” images without being able to recognize them as such. All of this, however, comes from what it learned from its original training dataset of thousands of photos. In other words, AI bias is also human bias.

The process of AI learning from an extensive database of images to produce new artworks, together with its results, is an innovative form of art creation. Artists can filter image details through the input and output. Therefore, humans remain the most crucial factor and the main source of decision-making. When entering prompt keywords, we need to be specific about what, when, and how. The keywords should be as specific as possible to avoid image bias and stereotypes, because the AI does not have the imagination to create images on its own.
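The “what, when, and how” guideline can be enforced with a small amount of structure. The following Python sketch is hypothetical: `PromptSpec` and its field names are illustrative assumptions, not part of Midjourney or of the author’s tooling. It requires every part of the prompt to be filled in before a prompt string is produced. This follows the argument above that unspecified parts are where the model falls back on the stereotypes in its training data.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical prompt structure that states what, when, and how explicitly."""

    what: str  # subject, e.g. who is depicted, with which skin tone or body shape
    when: str  # era or time context
    how: str   # art style or medium

    def to_prompt(self) -> str:
        # Refuse to build a prompt if any field is blank, so no part of the
        # description is left for the model to fill in from its defaults.
        missing = [name for name, value in vars(self).items() if not value.strip()]
        if missing:
            raise ValueError(f"unspecified prompt fields: {', '.join(missing)}")
        return f"{self.what}, {self.when}, {self.how}"


if __name__ == "__main__":
    spec = PromptSpec(
        what="diverse, aging fashion model",
        when="present day",
        how="Posthumanism and Futuristic art style",
    )
    print(spec.to_prompt())
```

Run as written, it prints `diverse, aging fashion model, present day, Posthumanism and Futuristic art style`. Leaving any field blank raises a `ValueError` that names the missing field instead of silently producing a vaguer prompt.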